Getting Started with ModelArts

ModelArts is easy to use for users at different levels of experience.

Service developers without AI development experience can use ExeML, a ModelArts feature, to build AI models without coding.

For developers who are familiar with writing and debugging code and with common AI engines, ModelArts provides online coding environments and an AI development lifecycle that covers data preparation, model training, model management, and service deployment, helping developers build models quickly and efficiently.

ExeML

ExeML is a customized code-free model development tool that helps users start AI application development from scratch with high flexibility. Using labeled data, ExeML automates model design, parameter tuning, training, compression, and deployment. Developers do not need programming or other foundational development skills; they only need to upload data and complete model training and deployment as prompted by ExeML.

For details about how to use ExeML, see Introduction to ExeML.

AI Development Lifecycle

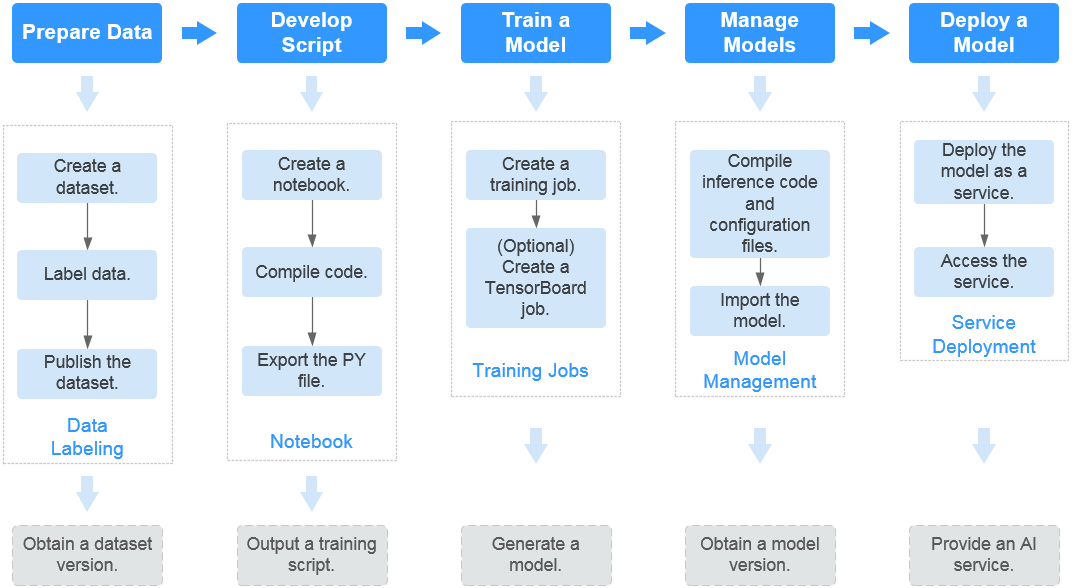

The AI development lifecycle on ModelArts takes developers' habits into consideration and provides a variety of engines and scenarios for developers to choose. The following describes the entire process from data preparation to service development using ModelArts.

Figure 1 Process of using ModelArts¶

| Task | Sub Task | Description | Reference |
|---|---|---|---|
| Data preparation | Creating a dataset | Create a dataset in ModelArts to manage and preprocess your business data. | |
| | Labeling data | Label and preprocess the data in your dataset based on the business logic to facilitate subsequent training. Data labeling affects model training performance. | |
| | Publishing a dataset | After labeling data, publish the dataset to generate a dataset version that can be used for model training. | |
| Development | Creating a notebook instance | Create a notebook instance as the development environment. | |
| | Writing code | Write code in an existing notebook to directly build a model. | |
| | Exporting the .py file | Export the training script as a .py file for subsequent operations, such as model training and management. | |
| Model training | Creating a training job | Create a training job, then upload and use the training script. After the training is complete, a model is generated and stored in OBS. | |
| | (Optional) Creating a visualization job | Create a visualization job (TensorBoard type) to view the model training process, learn about the model, and adjust and optimize the model. Currently, visualization jobs support only the MXNet and TensorFlow engines. | |
| Model management | Writing inference code and configuration files | Following the model package specifications provided by ModelArts, write inference code and configuration files for your model, and save them to the training output location. | |
| | Importing a model | Import the trained model to ModelArts to facilitate service deployment. | |
| Model deployment | Deploying a model as a service | Deploy a model as a real-time or batch service. | |
| | Accessing the service | After the service is deployed, access the real-time service, or view the prediction result of the batch service. | |
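
For planning purposes, the lifecycle in the table can be sketched as an ordered checklist. The snippet below is only an illustration: it does not call any ModelArts API, the `Step` type and `checklist` helper are hypothetical names introduced here, and the sub-task names are paraphrased from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One row of the lifecycle table (hypothetical helper type)."""
    task: str
    sub_task: str
    optional: bool = False


# Rows paraphrased from the lifecycle table, in table order.
LIFECYCLE = [
    Step("Data preparation", "Creating a dataset"),
    Step("Data preparation", "Labeling data"),
    Step("Data preparation", "Publishing a dataset"),
    Step("Development", "Creating a notebook instance"),
    Step("Development", "Writing code"),
    Step("Development", "Exporting the .py file"),
    Step("Model training", "Creating a training job"),
    Step("Model training", "Creating a visualization job", optional=True),
    Step("Model management", "Writing inference code and configuration files"),
    Step("Model management", "Importing a model"),
    Step("Model deployment", "Deploying a model as a service"),
    Step("Model deployment", "Accessing the service"),
]


def checklist(steps, include_optional=True):
    """Return numbered checklist lines, preserving table order."""
    selected = [s for s in steps if include_optional or not s.optional]
    lines = []
    for number, step in enumerate(selected, start=1):
        suffix = " (optional)" if step.optional else ""
        lines.append(f"{number:2d}. [{step.task}] {step.sub_task}{suffix}")
    return lines


if __name__ == "__main__":
    print("\n".join(checklist(LIFECYCLE)))
```

Calling `checklist(LIFECYCLE, include_optional=False)` drops the visualization job, which is the only step the table marks as optional.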