Balancing DataNode Capacity¶

Scenario¶

In the HDFS cluster, unbalanced disk usage among DataNodes may occur, for example, when new DataNodes are added to the cluster. Unbalanced disk usage may result in multiple problems. For example, MapReduce applications cannot make full use of local computing advantages, network bandwidth usage between data nodes cannot be optimal, or node disks cannot be used. Therefore, the system administrator needs to periodically check and maintain DataNode data balance.

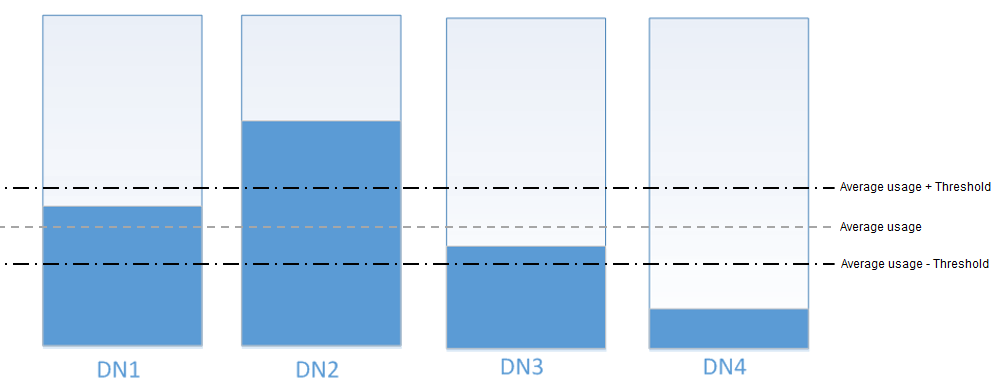

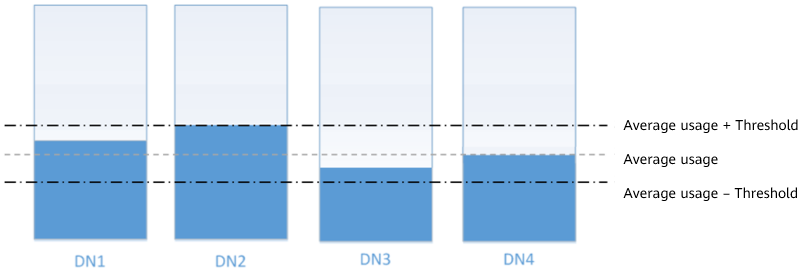

HDFS provides a capacity balancing program Balancer. By running Balancer, you can balance the HDFS cluster and ensure that the difference between the disk usage of each DataNode and that of the HDFS cluster does not exceed the threshold. DataNode disk usage before and after balancing is shown in Figure 1 and Figure 2, respectively.

Figure 1 DataNode disk usage before balancing¶

Figure 2 DataNode disk usage after balancing¶

The time of the balancing operation is affected by the following two factors:

Total amount of data to be migrated:

The data volume of each DataNode must be greater than (Average usage - Threshold) x Average data volume and less than (Average usage + Threshold) x Average data volume. If the actual data volume is less than the minimum value or greater than the maximum value, imbalance occurs. The system sets the largest deviation volume on all DataNodes as the total data volume to be migrated.

Balancer migration is performed in sequence in iteration mode. The amount of data to be migrated in each iteration does not exceed 10 GB, and the usage of each iteration is recalculated.

Therefore, for a cluster, you can estimate the time consumed by each iteration (by observing the time consumed by each iteration recorded in balancer logs) and divide the total data volume by 10 GB to estimate the task execution time.

The balancer can be started or stopped at any time.

Impact on the System¶

The balance operation occupies network bandwidth resources of DataNodes. Perform the operation during maintenance based on service requirements.

The balance operation may affect the running services if the bandwidth traffic (the default bandwidth control is 20 MB/s) is reset or the data volume is increased.

Prerequisites¶

The client has been installed.

Procedure¶

Log in to the node where the client is installed as a client installation user. Run the following command to switch to the client installation directory, for example, /opt/client:

cd /opt/client

Note

If the cluster is in normal mode, run the su - omm command to switch to user omm.

Run the following command to configure environment variables:

source bigdata_env

If the cluster is in security mode, run the following command to authenticate the HDFS identity:

kinit hdfs

Determine whether to adjust the bandwidth control.

Run the following command to change the maximum bandwidth of Balancer, and then go to 6.

hdfs dfsadmin -setBalancerBandwidth <bandwidth in bytes per second>

<bandwidth in bytes per second> indicates the bandwidth control value, in bytes. For example, to set the bandwidth control to 20 MB/s (the corresponding value is 20971520), run the following command:

hdfs dfsadmin -setBalancerBandwidth 20971520

Note

The default bandwidth control is 20 MB/s. This value is applicable to the scenario where the current cluster uses the 10GE network and services are being executed. If the service idle time window is insufficient for balance maintenance, you can increase the value of this parameter to shorten the balance time, for example, to 209715200 (200 MB/s).

The value of this parameter depends on the networking. If the cluster load is high, you can change the value to 209715200 (200 MB/s). If the cluster is idle, you can change the value to 1073741824 (1 GB/s).

If the bandwidth of the DataNodes cannot reach the specified maximum bandwidth, modify the HDFS parameter dfs.datanode.balance.max.concurrent.moves on FusionInsight Manager, and change the number of threads for balancing on each DataNode to 32 and restart the HDFS service.

Run the following command to start the balance task:

bash /opt/client/HDFS/hadoop/sbin/start-balancer.sh -threshold <threshold of balancer>

-threshold specifies the deviation value of the DataNode disk usage, which is used for determining whether the HDFS data is balanced. When the difference between the disk usage of each DataNode and the average disk usage of the entire HDFS cluster is less than this threshold, the system considers that the HDFS cluster has been balanced and ends the balance task.

For example, to set deviation rate to 5%, run the following command:

bash /opt/client/HDFS/hadoop/sbin/start-balancer.sh -threshold 5

Note

The preceding command executes the task in the background. You can query related logs in the hadoop-root-balancer-host name.out log file in the /opt/client/HDFS/hadoop/logs directory of the host.

To stop the balance task, run the following command:

bash /opt/client/HDFS/hadoop/sbin/stop-balancer.sh

If only data on some nodes needs to be balanced, you can add the -include parameter in the script to specify the nodes to be migrated. You can run commands to view the usage of different parameters.

/opt/client is the client installation directory. If the directory is inconsistent, replace it.

If the command fails to be executed and the error information Failed to APPEND_FILE /system/balancer.id is displayed in the log, run the following command to forcibly delete /system/balancer.id and run the start-balancer.sh script again:

hdfs dfs -rm -f /system/balancer.id

If the following information is displayed, the balancing is complete and the system automatically exits the task:

Apr 01, 2016 01:01:01 PM Balancing took 23.3333 minutes

After you run the script in 6, the hadoop-root-balancer-Host name.out log file is generated in the client installation directory /opt/client/HDFS/hadoop/logs. You can view the following information in the log:

Time Stamp

Bytes Already Moved

Bytes Left To Move

Bytes Being Moved