Link to Hive

CDM supports the following Hive data sources: MRS Hive, FusionInsight Hive, and Apache Hive.

Note

Do not change the password or user while a job is running. If you do, the new password does not take effect immediately and the job fails.

MRS Hive

During field mapping, you can view a table only if you have permission to access it through the MRS Hive link.

MRS Hive links apply to MapReduce Service (MRS) on the cloud. Table 1 describes related parameters.

Note

Before creating an MRS Hive link, you need to add an authenticated Kerberos user on MRS and log in to the MRS management page to change the initial password. Then use the new user to create an MRS link.

To connect to an MRS 2.x cluster, create a CDM cluster of version 2.x first. CDM 1.8.x clusters cannot connect to MRS 2.x clusters.

Currently, the Hive link obtains the core-site.xml configuration information from MRS HDFS. Therefore, if MRS Hive uses OBS as the underlying storage system, configure the AK/SK of OBS on MRS HDFS before creating the Hive link.

Ensure that the MRS cluster and the DataArts Studio instance can communicate with each other. The following requirements must be met for network interconnection:

If the CDM cluster in the DataArts Studio instance and the MRS cluster are in different regions, they must be connected over the Internet or a dedicated connection. If they communicate over the Internet, ensure that an EIP has been bound to the CDM cluster, that the MRS cluster can access the Internet, and that the required port is allowed by the firewall rules.

If the CDM cluster in the DataArts Studio instance and the MRS cluster are in the same region, VPC, subnet, and security group, they can communicate with each other by default. If they are in the same VPC but in different subnets or security groups, you must configure routing rules and security group rules. For details about how to configure routing rules, see "Adding a Custom Route" in Virtual Private Cloud (VPC) Usage Guide. For details about how to configure security group rules, see Security Group > Adding a Security Group Rule in Virtual Private Cloud (VPC) Usage Guide.

The MRS cluster and the DataArts Studio workspace must belong to the same enterprise project. If they do not, you can modify the enterprise project of the workspace.
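As a rough way to check the network requirements above, the following Python sketch tests whether a TCP port on a remote host is reachable. It is an illustration only: the function name `can_connect` is hypothetical, the address in the usage comment is a placeholder, and the result is meaningful only when the check runs from a host in the same network position as the CDM cluster.

```python
import socket


def can_connect(host: str, port: int, timeout_s: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout_s.

    Hypothetical helper for illustration; it is not part of CDM or MRS.
    """
    try:
        # create_connection resolves the host and opens a TCP connection.
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False


# Usage (placeholder address and port, replace with your own values):
# can_connect("192.0.2.10", 10000)
```

A successful TCP connection shows only that routing, security group rules, and firewall rules allow the traffic. It does not verify Kerberos authentication or Hive permissions.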

Parameter | Description | Example Value |

|---|---|---|

Name | Link name, which should be defined based on the data source type, so it is easier to remember what the link is for | hivelink |

Manager IP | Floating IP address of MRS Manager. Click Select next to the Manager IP text box to select an MRS cluster. CDM automatically fills in the authentication information. Note DataArts Studio does not support MRS clusters whose Kerberos encryption type is aes256-sha2,aes128-sha2, and only supports MRS clusters whose Kerberos encryption type is aes256-sha1,aes128-sha1. | 127.0.0.1 |

Authentication Method | Authentication method used for accessing MRS

| SIMPLE |

HIVE Version | Set this to the Hive version on the server. | HIVE_3_X |

Username | If Authentication Method is set to KERBEROS, you must provide the username and password used for logging in to MRS Manager. If you need to create a snapshot when exporting a directory from HDFS, the user configured here must have the administrator permission on HDFS. To create a data connection for an MRS security cluster, do not use user admin. The admin user is the default management page user and cannot be used as the authentication user of the security cluster. You can create an MRS user and set Username and Password to the username and password of the created MRS user when creating an MRS data connection. Note

| cdm |

Password | Password used for logging in to MRS Manager |

|

Enable ldap | This parameter is available when Proxy connection is selected for Connection Type. If LDAP authentication is enabled for an external LDAP server connected to MRS Hive, the LDAP username and password are required for authenticating the connection to MRS Hive. In this case, this option must be enabled. Otherwise, the connection will fail. | No |

ldapUsername | This parameter is mandatory when Enable ldap is enabled. Enter the username configured when LDAP authentication was enabled for MRS Hive. |

|

ldapPassword | This parameter is mandatory when Enable ldap is enabled. Enter the password configured when LDAP authentication was enabled for MRS Hive. |

|

OBS storage support | The server must support OBS storage. When creating a Hive table, you can store the table in OBS. | No |

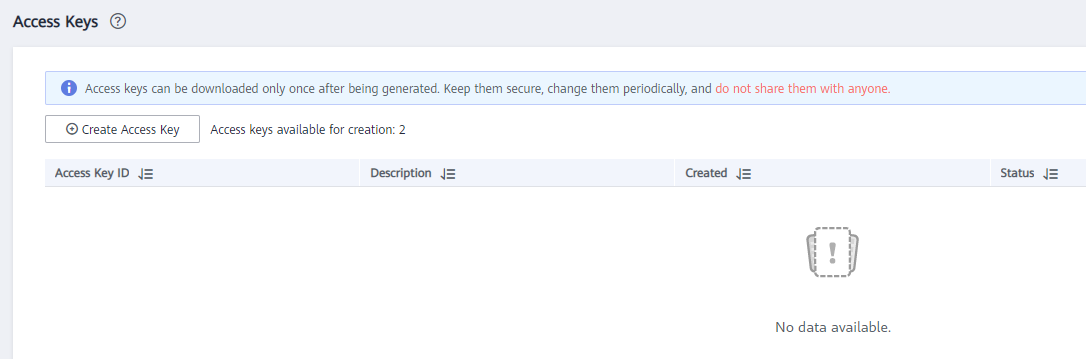

AK | This parameter is mandatory when OBS storage support is enabled. The account corresponding to the AK/SK pair must have the OBS Buckets Viewer permission. Otherwise, OBS cannot be accessed and the "403 AccessDenied" error is reported. You need to create an access key for the current account and obtain an AK/SK pair.

|

|

SK |

| |

Run Mode | This parameter is used only when the Hive version is HIVE_3_X. Possible values are:

| EMBEDDED |

Check Hive JDBC Connectivity | Whether to check the Hive JDBC connectivity | No |

Use Cluster Config | You can use the cluster configuration to simplify parameter settings for the Hadoop connection. | No |

Cluster Config Name | This parameter is valid only when Use Cluster Config is set to Yes. Select a cluster configuration that has been created. For details about how to configure a cluster, see "DataArts Migration" > "Managing Links" > "Managing Cluster Configurations" in User Guide. | hive_01 |

Click Show Advanced Attributes, and then click Add to add configuration attributes of other clients. You must configure both the name and the value of each attribute. To remove an attribute that is no longer needed, click Delete.

The following are some examples:

connectTimeout=360000 and socketTimeout=360000: When a large amount of data is migrated or an entire table is retrieved using query statements, the migration may fail due to a connection timeout. In this case, you can customize the connection timeout interval (ms) and socket timeout interval (ms) to prevent such failures.

hive.server2.idle.operation.timeout=360000: To prevent Hive migration jobs from being suspended for a long time, you can customize the operation timeout period (ms).

hive.storeFormat=textfile: During data migration from a relational database to Hive, tables in ORC format are automatically created by default. If you want textfile or parquet tables to be created, add hive.storeFormat=textfile or hive.storeFormat=parquet.

fs.defaultFS=obs://hivedb: If the interconnected MRS Hive uses decoupled storage and compute, you can use this configuration to achieve better compatibility.
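The attribute examples above all follow a name=value form with a few documented constraints (timeouts in milliseconds, hive.storeFormat set to textfile or parquet). The following Python sketch shows one way to check such entries before typing them into the console. The helper `parse_attributes` and its validation rules are assumptions made for illustration. CDM itself does not expose this function, and it may accept values that this sketch rejects.

```python
# Attribute names taken from the examples in this section.
TIMEOUT_KEYS_MS = {
    "connectTimeout",
    "socketTimeout",
    "hive.server2.idle.operation.timeout",
}
# Only the two non-default formats named in the text are accepted here (assumption).
DOCUMENTED_STORE_FORMATS = {"textfile", "parquet"}


def parse_attributes(lines):
    """Parse 'name=value' strings into a dict and apply basic sanity checks."""
    attrs = {}
    for line in lines:
        # Split on the first '=' only, so values may contain '=' themselves.
        name, sep, value = line.partition("=")
        name, value = name.strip(), value.strip()
        if not sep or not name or not value:
            raise ValueError(f"expected name=value, got {line!r}")
        if name in TIMEOUT_KEYS_MS:
            if not (value.isascii() and value.isdigit()) or int(value) <= 0:
                raise ValueError(f"{name} must be a positive integer (ms)")
        if name == "hive.storeFormat" and value not in DOCUMENTED_STORE_FORMATS:
            raise ValueError(f"unexpected hive.storeFormat value: {value!r}")
        attrs[name] = value
    return attrs


attrs = parse_attributes([
    "connectTimeout=360000",
    "socketTimeout=360000",
    "hive.storeFormat=parquet",
    "fs.defaultFS=obs://hivedb",
])
```

Values are kept as strings because the console takes each attribute as a name and a value in text form.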

FusionInsight Hive

The FusionInsight Hive link is applicable to data migration of FusionInsight HD in the local data center. You must use Direct Connect to connect to FusionInsight HD.

Table 2 describes related parameters.

| Parameter | Description | Example Value |
|---|---|---|
| Name | Link name. Define it based on the data source type so that the purpose of the link is easy to remember. | hivelink |
| Manager IP | IP address of FusionInsight Manager | 127.0.0.1 |
| Manager Port | Port number of FusionInsight Manager | 28443 |
| CAS Server Port | Port number of the CAS server used to connect to FusionInsight | 20009 |
| Authentication Method | Authentication method used for accessing the cluster | SIMPLE |
| HIVE Version | Hive version | HIVE_3_X |
| Username | Username used for logging in to FusionInsight Manager | cdm |
| Password | Password used for logging in to FusionInsight Manager | |
| OBS storage support | The server must support OBS storage. When creating a Hive table, you can store the table in OBS. | No |
| AK | This parameter is mandatory when OBS storage support is enabled. The account corresponding to the AK/SK pair must have the OBS Buckets Viewer permission. Otherwise, OBS cannot be accessed and the "403 AccessDenied" error is reported. You need to create an access key for the current account and obtain an AK/SK pair. | |
| SK | Same as AK. | |
| Run Mode | This parameter is used only when the Hive version is HIVE_3_X. | EMBEDDED |
| Use Cluster Config | You can use the cluster configuration to simplify parameter settings for the Hadoop connection. | No |
| Cluster Config Name | This parameter is valid only when Use Cluster Config is set to Yes. Select a cluster configuration that has been created. For details about how to configure a cluster, see "DataArts Migration" > "Managing Links" > "Managing Cluster Configurations" in User Guide. | hive_01 |

Click Show Advanced Attributes, and then click Add to add configuration attributes of other clients. You must configure both the name and the value of each attribute. To remove an attribute that is no longer needed, click Delete.

The following are some examples:

connectTimeout=360000 and socketTimeout=360000: When a large amount of data is migrated or an entire table is retrieved using query statements, the migration may fail due to a connection timeout. In this case, you can customize the connection timeout interval (ms) and socket timeout interval (ms) to prevent such failures.

hive.server2.idle.operation.timeout=360000: To prevent Hive migration jobs from being suspended for a long time, you can customize the operation timeout period (ms).

Apache Hive

The Apache Hive link applies to data migration of third-party Hadoop deployed in a local data center or on ECSs. You must use Direct Connect to connect to Hadoop in the local data center.

Table 3 describes related parameters.

| Parameter | Description | Example Value |
|---|---|---|
| Name | Link name. Define it based on the data source type so that the purpose of the link is easy to remember. | hivelink |
| URI | NameNode URI | hdfs://hacluster |
| Hive Metastore | Hive metadata address. For details, see the hive.metastore.uris configuration item. Example: thrift://host-192-168-1-212:9083 | |
| Authentication Method | Authentication method used for accessing the cluster | SIMPLE |
| Hive Version | Hive version | HIVE_3_X |
| IP and Host Name Mapping | If the Hadoop configuration file uses the host name, configure the mapping between the IP address and host name. Separate each IP address from its host name with a space, and separate mappings with semicolons (;), carriage returns, or line feeds. | |
| OBS storage support | The server must support OBS storage. When creating a Hive table, you can store the table in OBS. | No |
| AK | This parameter is mandatory when OBS storage support is enabled. The account corresponding to the AK/SK pair must have the OBS Buckets Viewer permission. Otherwise, OBS cannot be accessed and the "403 AccessDenied" error is reported. You need to create an access key for the current account and obtain an AK/SK pair. | |
| SK | Same as AK. | |
| Run Mode | This parameter is used only when the Hive version is HIVE_3_X. | EMBEDDED |
| Use Cluster Config | You can use the cluster configuration to simplify parameter settings for the Hadoop connection. | No |
| Cluster Config Name | This parameter is valid when Use Cluster Config is set to Yes or Authentication Method is set to KERBEROS. Select a cluster configuration that has been created. For details about how to configure a cluster, see "DataArts Migration" > "Managing Links" > "Managing Cluster Configurations" in User Guide. | hive_01 |
| Hive JDBC URL | URL for connecting to Hive JDBC. By default, anonymous users are used. | |
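The IP and Host Name Mapping format in Table 3 (IP address and host name separated by spaces, mappings separated by semicolons, carriage returns, or line feeds) can be illustrated with a short Python sketch. The function `parse_host_mappings` is hypothetical, and allowing several host names after one IP address is an assumption borrowed from the hosts-file convention, not something this page states. The second address in the example is invented for illustration.

```python
import ipaddress
import re


def parse_host_mappings(text: str) -> dict:
    """Return {host_name: ip} parsed from an IP and Host Name Mapping string."""
    mapping = {}
    # Mappings are separated by semicolons, carriage returns, or line feeds.
    for entry in re.split(r"[;\r\n]+", text):
        fields = entry.split()  # IP and host names are separated by spaces
        if not fields:
            continue  # ignore empty entries, for example after a trailing ';'
        if len(fields) < 2:
            raise ValueError(f"expected '<ip> <host name>', got {entry!r}")
        ip, *hosts = fields
        ipaddress.ip_address(ip)  # raises ValueError if the address is malformed
        for host in hosts:
            mapping[host] = ip
    return mapping


mappings = parse_host_mappings(
    "192.168.1.212 host-192-168-1-212;192.168.1.213 host-192-168-1-213\n"
)
```

Checking the value this way before creating the link can catch a missing host name or a mistyped address early, since a wrong mapping typically surfaces only later as a connection failure.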

Click Show Advanced Attributes, and then click Add to add configuration attributes of other clients. You must configure both the name and the value of each attribute. To remove an attribute that is no longer needed, click Delete.

The following are some examples:

connectTimeout=360000 and socketTimeout=360000: When a large amount of data is migrated or an entire table is retrieved using query statements, the migration may fail due to a connection timeout. In this case, you can customize the connection timeout interval (ms) and socket timeout interval (ms) to prevent such failures.

hive.server2.idle.operation.timeout=360000: To prevent Hive migration jobs from being suspended for a long time, you can customize the operation timeout period (ms).
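The Hive Metastore address in Table 3 uses the thrift://host:port form of the hive.metastore.uris configuration item. The Python sketch below splits such a value into host and port pairs so that a typo can be spotted before the link is created. The function `parse_metastore_uris` is hypothetical. In Hive, hive.metastore.uris may hold a comma-separated list, and the sketch accepts one, but whether the CDM field also accepts a list is not stated on this page.

```python
from urllib.parse import urlsplit


def parse_metastore_uris(value: str):
    """Split a hive.metastore.uris style value into (host, port) tuples."""
    endpoints = []
    for uri in value.split(","):
        parts = urlsplit(uri.strip())
        # parts.port raises ValueError by itself if the port is not a valid number.
        if parts.scheme != "thrift" or not parts.hostname or parts.port is None:
            raise ValueError(f"expected thrift://host:port, got {uri!r}")
        endpoints.append((parts.hostname, parts.port))
    return endpoints


endpoints = parse_metastore_uris("thrift://host-192-168-1-212:9083")
```

If the host part is a host name rather than an IP address, as in the example from Table 3, the IP and Host Name Mapping parameter needs a matching entry.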