Failed to Restart a Container

If an event on the details page of a workload indicates that the container failed to restart, perform the following operations to locate the fault:

Log in to the node where the abnormal workload is located.

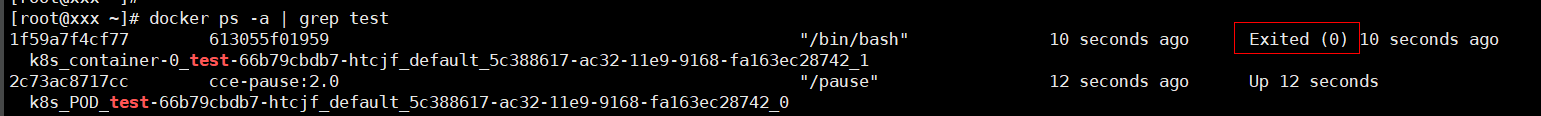

Obtain the ID of the container that exited abnormally in the workload pod.

docker ps -a | grep $podName

View the logs of the corresponding container.

docker logs $containerID

Rectify the workload fault based on the logs.

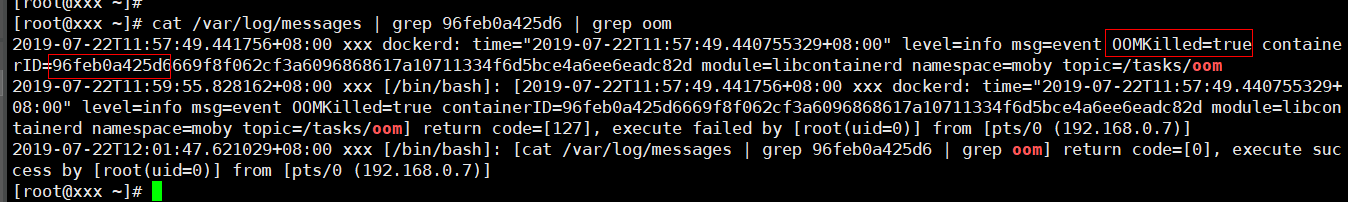

Check the system logs for OOM errors.

grep "$containerID" /var/log/messages | grep oom

Determine from the logs whether a system OOM event was triggered.
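The container lookup in the steps above can also be scripted. Grepping the default docker ps table is fragile because its COMMAND and CREATED columns contain spaces, so this minimal sketch assumes you asked Docker for tab-separated fields with `docker ps -a --filter "name=$podName" --format '{{.ID}}\t{{.Status}}\t{{.Names}}'` and parses that text. The function name `exited_containers` and the sample pod name are hypothetical.

```python
# Find exited containers that belong to a pod.
# A minimal sketch, assuming the text was produced by (tab-separated fields):
#   docker ps -a --filter "name=$podName" --format '{{.ID}}\t{{.Status}}\t{{.Names}}'
from typing import List, Tuple


def exited_containers(ps_output: str, pod_name: str) -> List[Tuple[str, str]]:
    """Return (container_id, status) pairs for exited containers of pod_name."""
    results = []
    for line in ps_output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        container_id, status, names = line.split("\t", 2)
        # Substring match, like grep $podName; also works on unfiltered output.
        if pod_name in names and status.startswith("Exited"):
            results.append((container_id, status))
    return results


if __name__ == "__main__":
    # Hypothetical sample output; kubelet-created container names contain the pod name.
    sample = (
        "96feb0a425d6\tExited (137) 2 minutes ago\tk8s_app_mypod_default_uid_1\n"
        "1a2b3c4d5e6f\tUp 10 minutes\tk8s_POD_mypod_default_uid_0\n"
    )
    for cid, status in exited_containers(sample, "mypod"):
        print(cid, status)
```

The returned IDs are the values to pass to docker logs and to the grep on /var/log/messages.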

Fault Locating

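Check Item 1: Whether There Are Processes that Keep Running in the Container

Check Item 2: Whether Health Check Fails to Be Performed

Check Item 3: Whether the User Service Has a Bug

Check Item 4: Whether the Upper Limit of Container Resources Has Been Reached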

Check Item 5: Whether the Container Disk Space Is Insufficient

Check Item 6: Whether the Resource Limits Are Improperly Set for the Container

Check Item 7: Whether the Container Ports in the Same Pod Conflict with Each Other

Check Item 8: Whether the Container Startup Command Is Correctly Configured

Check Item 1: Whether There Are Processes that Keep Running in the Container

Log in to the node where the abnormal workload is located.

View the container status.

docker ps -a | grep $podName

If no process keeps running in the container, the container exits once its main process finishes, and its status is displayed as Exited (0).
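The exit code in the STATUS column often hints at the cause. This sketch extracts it and maps it to a likely reason using common shell conventions (126 for a command that cannot be executed, 127 for a command that is not found, 128 + n for termination by signal n, so 137 means SIGKILL). The mapping is a heuristic rather than an authoritative diagnosis, and the helper names `exit_code` and `describe` are hypothetical.

```python
# Interpret the exit code in a docker ps STATUS such as "Exited (0) 3 minutes ago".
# Heuristic mapping based on common shell conventions; not an official diagnosis.
import re
from typing import Optional

_EXITED = re.compile(r"^Exited \((\d+)\)")

# A few common signal numbers on Linux.
_SIGNALS = {2: "SIGINT", 6: "SIGABRT", 9: "SIGKILL", 11: "SIGSEGV", 15: "SIGTERM"}


def exit_code(status: str) -> Optional[int]:
    """Return the exit code from a docker ps status, or None if the container has not exited."""
    match = _EXITED.match(status)
    return int(match.group(1)) if match else None


def describe(code: int) -> str:
    """Map an exit code to a likely cause."""
    if code == 0:
        return "main process finished normally (no long-running process)"
    if code == 126:
        return "command found but not executable"
    if code == 127:
        return "command not found"
    if code > 128:
        sig = code - 128  # the process was terminated by signal `sig`
        name = _SIGNALS.get(sig, f"signal {sig}")
        note = " (often the OOM killer)" if sig == 9 else ""
        return f"killed by {name}{note}"
    return "application exited with an error; check docker logs"
```

For example, a status of Exited (137) points toward Check Item 4, while Exited (127) points toward the startup command checks.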

Check Item 2: Whether Health Check Fails to Be Performed

The health check configured for a workload probes the services periodically. If the check detects an exception, the pod reports an event and fails to restart.

If a liveness probe (a health check of the liveness type) is configured for the workload and the number of consecutive probe failures reaches the threshold, the containers in the pod are restarted. If the Kubernetes events on the workload details page contain Liveness probe failed: Get http..., the health check has failed.
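The restart condition described above can be modeled in a few lines. This simplified sketch counts consecutive probe failures against a threshold (the Kubernetes `failureThreshold` field, which defaults to 3) and resets the count on any success. It deliberately ignores timing fields such as `initialDelaySeconds`, `periodSeconds`, and `timeoutSeconds`, and `restart_points` is a hypothetical helper.

```python
# Simplified model of liveness-probe restarts: a restart is triggered once
# `failure_threshold` consecutive probes fail; any success resets the counter.
# Timing fields (initialDelaySeconds, periodSeconds, timeoutSeconds) are ignored.
from typing import Iterable, List


def restart_points(results: Iterable[bool], failure_threshold: int = 3) -> List[int]:
    """Return the indexes of probes after which the container would be restarted.

    `results` holds one boolean per probe: True = success, False = failure.
    """
    restarts = []
    consecutive_failures = 0
    for index, ok in enumerate(results):
        if ok:
            consecutive_failures = 0  # a success resets the failure count
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restarts.append(index)
            consecutive_failures = 0  # the restarted container starts counting again
    return restarts
```

A service that only fails intermittently may never trigger a restart, whereas a probe pointed at the wrong path or port fails every time and restarts the container repeatedly.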

Solution

On the workload details page, choose Upgrade > Advanced Settings > Health Check, and check whether the health check policy is properly configured and whether the services are running normally.

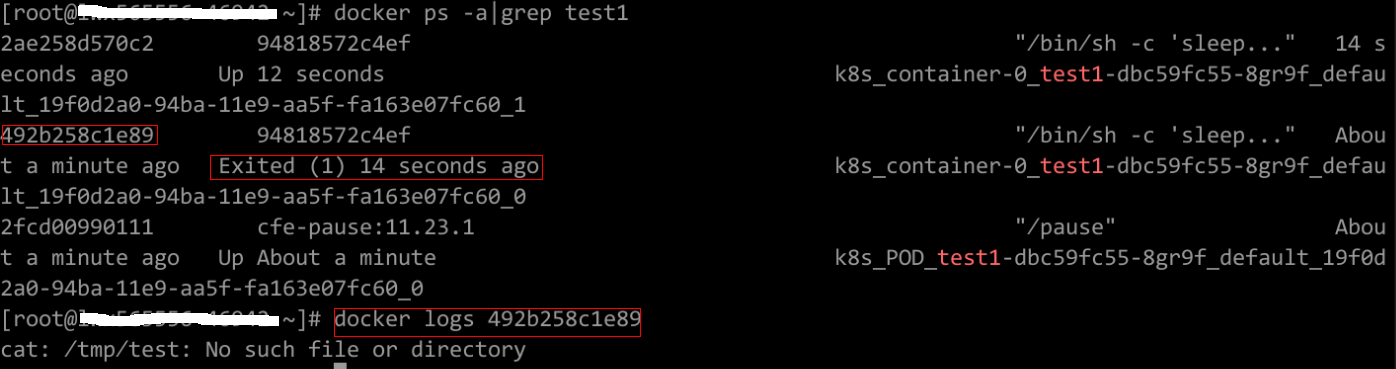

Check Item 3: Whether the User Service Has a Bug

Check whether the workload startup command executes correctly and whether the workload itself has a bug.

Log in to the node where the abnormal workload is located.

Obtain the ID of the container that exited abnormally in the workload pod.

docker ps -a | grep $podName

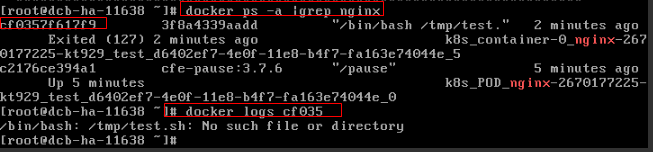

View the logs of the corresponding container.

docker logs $containerID

Note: In the preceding command, containerID indicates the ID of the container that has exited.

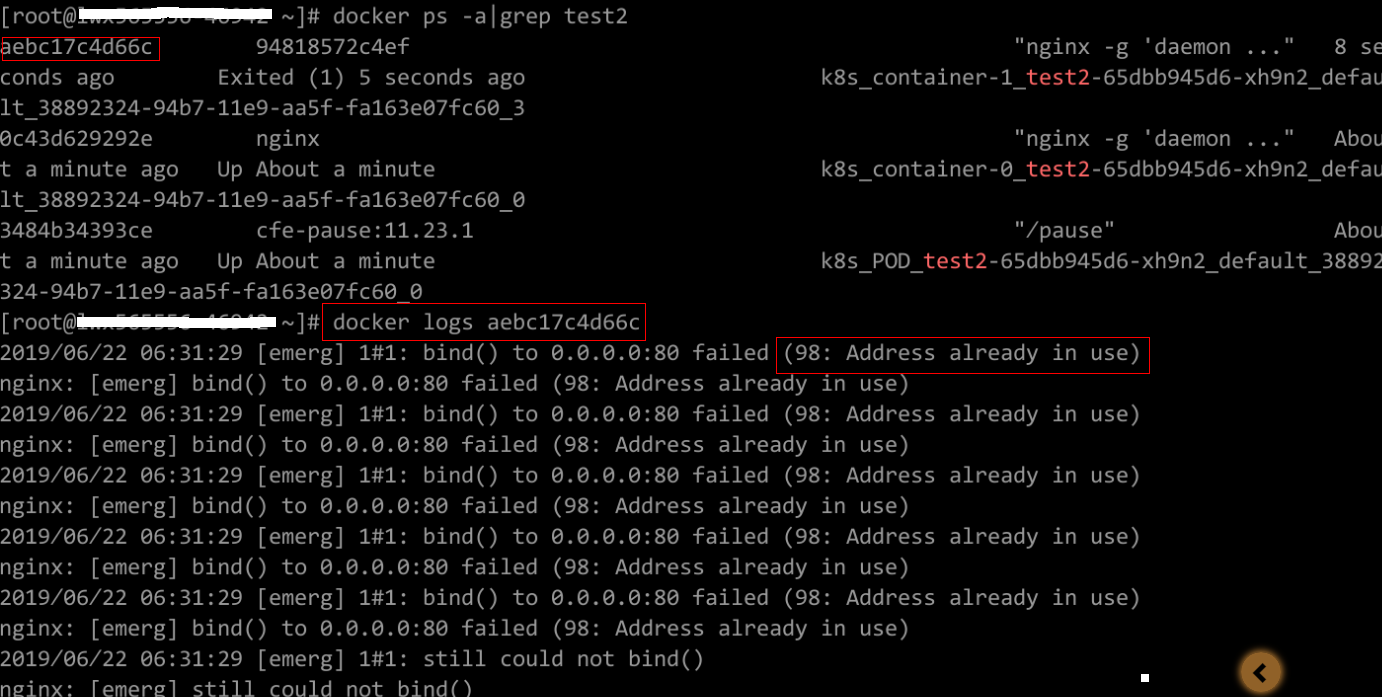

Figure 1 Incorrect startup command of the container

As shown in Figure 1, the container fails to start because its startup command is incorrect. For other errors, fix the bugs indicated in the logs.
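When a container exits at startup, the cause is often visible in the first few log lines. This sketch scans log text for a few typical messages about a missing or non-executable command. The patterns are assumptions drawn from common shell and container runtime error messages, not an exhaustive or official list, and `startup_hints` is a hypothetical helper.

```python
# Flag common startup-command errors in container log text.
# The patterns are assumptions based on typical shell/runtime messages; extend as needed.
import re
from typing import List

# (compiled regex, hint shown to the operator)
_PATTERNS = [
    (re.compile(r"executable file not found in \$PATH"), "command is not on the image PATH"),
    (re.compile(r"no such file or directory", re.IGNORECASE), "command or script path does not exist"),
    (re.compile(r"permission denied", re.IGNORECASE), "command may lack execute permission"),
    (re.compile(r": not found$"), "shell could not find the command"),
]


def startup_hints(log_text: str) -> List[str]:
    """Return one hint per log line that matches a known startup-command error."""
    hints = []
    for raw in log_text.splitlines():
        line = raw.strip()
        for pattern, hint in _PATTERNS:
            if pattern.search(line):
                hints.append(f"{hint}: {line}")
                break  # report at most one hint per line
    return hints
```

Feed it the output of docker logs $containerID; an empty result means none of the assumed patterns matched, not that the command is correct.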

Solution: Re-create the workload and configure a correct startup command.

Check Item 4: Whether the Upper Limit of Container Resources Has Been Reached

If the container has reached its resource limit, OOM is displayed in the event details and in the system log, which you can check as follows:

grep 96feb0a425d6 /var/log/messages | grep oom

If the memory used by a container exceeds the limit configured for it during workload creation, the system OOM killer is triggered and the container exits unexpectedly.

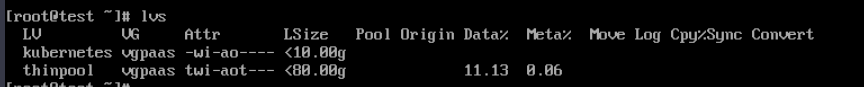
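The grep pipeline in this check item can be mirrored in Python when you want to post-process the matches, for example to collect them from several nodes. This is a minimal sketch: like the command, it matches the lowercase string `oom` case-sensitively, `oom_lines` is a hypothetical helper, and the sample log lines used to exercise it are invented.

```python
# Python counterpart of: grep <containerID> /var/log/messages | grep oom
import sys
from typing import Iterable, List


def oom_lines(lines: Iterable[str], container_id: str) -> List[str]:
    """Return the lines that mention container_id and contain 'oom' (case-sensitive, like grep)."""
    return [line.rstrip("\n") for line in lines if container_id in line and "oom" in line]


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage (reading /var/log/messages usually requires root):
    #   python oom_lines.py 96feb0a425d6
    with open("/var/log/messages", encoding="utf-8", errors="replace") as log:
        for match in oom_lines(log, sys.argv[1]):
            print(match)
```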

Check Item 5: Whether the Container Disk Space Is Insufficient

The following message indicates that the Thin Pool disk, which is allocated from the Docker disk selected during node creation, is running out of free space. Run the lvs command as root to view the current disk usage.

Thin Pool has 15991 free data blocks which is less than minimum required 16383 free data blocks. Create more free space in thin pool or use dm.min_free_space option to change behavior
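To see how far the thin pool is below the threshold, the block counts can be pulled out of the message. This small sketch assumes the message text matches the one shown above; `thin_pool_shortfall` is a hypothetical helper, and it reports data blocks only because the block size depends on how the thin pool was created.

```python
# Parse the Docker devicemapper thin-pool warning and report the shortfall in data blocks.
import re
from typing import Optional, Tuple

_THIN_POOL = re.compile(
    r"Thin Pool has (\d+) free data blocks which is less than "
    r"minimum required (\d+) free data blocks"
)


def thin_pool_shortfall(message: str) -> Optional[Tuple[int, int, int]]:
    """Return (free, required, missing) block counts, or None if the message does not match."""
    match = _THIN_POOL.search(message)
    if match is None:
        return None
    free, required = int(match.group(1)), int(match.group(2))
    return free, required, max(required - free, 0)
```

For the message above, the pool is 392 blocks short (16383 - 15991).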

Solution

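Release used disk space, for example by forcibly deleting unused images. In the following command, 20202 is the keyword used to select the images to delete; replace it with a pattern that matches your own unused images.

docker rmi -f $(docker images | grep 20202 | awk '{print $3}')

Alternatively, expand the disk capacity. For details, see the method of expanding the data disk capacity of a node.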

Check Item 6: Whether the Resource Limits Are Improperly Set for the Container

If the resource limits set for the container during workload creation are lower than the container requires, the container fails to restart and the following event is displayed:

Back-off restarting failed container

Solution

Modify the container specifications so that the resource limits meet the actual requirements of the container.
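When sizing the new limits, it can help to compare the memory limit with the container's observed peak usage. This sketch is illustrative only: it parses a subset of Kubernetes quantity suffixes, the 10% headroom is an arbitrary assumption, the helper names are hypothetical, and the peak usage value must come from your own monitoring.

```python
# Compare a memory limit with observed peak usage.
# Supports a subset of Kubernetes quantity suffixes: Ki, Mi, Gi, Ti, k, M, G, T, or plain bytes.
import re

_SUFFIXES = {
    "": 1,
    "k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4,
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4,
}
_QUANTITY = re.compile(r"^(\d+(?:\.\d+)?)([KMGT]i|[kMGT])?$")


def to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '512Mi' or '1G' to bytes."""
    match = _QUANTITY.match(quantity.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.group(1), match.group(2) or ""
    return int(float(number) * _SUFFIXES[suffix])


def limit_too_low(limit: str, peak_usage: str, headroom: float = 0.1) -> bool:
    """True if peak usage plus a safety margin (assumed 10% by default) exceeds the limit."""
    return to_bytes(peak_usage) * (1 + headroom) > to_bytes(limit)
```

For example, a 512Mi limit for a container that peaks at 500Mi leaves less than 10% headroom, so `limit_too_low("512Mi", "500Mi")` returns True.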

Check Item 7: Whether the Container Ports in the Same Pod Conflict with Each Other

Log in to the node where the abnormal workload is located.

Obtain the ID of the container that exited abnormally in the workload pod.

docker ps -a | grep $podName

View the logs of the corresponding container.

docker logs $containerID

Rectify the workload fault based on the logs. As shown in Figure 2, the ports of containers in the same pod conflict, so the container fails to start.

Figure 2 Container restart failure due to a container port conflict

Solution

Re-create the workload and set a port number that is not used by any other container in the same pod.
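Because containers in the same pod share one network namespace, two of them cannot listen on the same port and protocol. Before re-creating the workload, you can check the container ports you plan to declare for duplicates. This is a minimal sketch with hypothetical container names and a hypothetical helper `port_conflicts`; note that it checks declared containerPort values, which may differ from the ports the processes actually bind.

```python
# Find (port, protocol) pairs declared by more than one container in a pod.
# Input mirrors the containerPort/protocol fields of the pod's containers.
from collections import defaultdict
from typing import Dict, List, Tuple

Port = Tuple[int, str]  # (containerPort, protocol)


def port_conflicts(containers: Dict[str, List[Port]]) -> Dict[Port, List[str]]:
    """Map each (port, protocol) used by two or more containers to those container names."""
    owners: Dict[Port, List[str]] = defaultdict(list)
    for name, ports in containers.items():
        for port in set(ports):  # ignore duplicates within a single container
            owners[port].append(name)
    return {port: names for port, names in owners.items() if len(names) > 1}
```

For example, a pod whose `web` and `sidecar` containers both declare 8080/TCP yields `{(8080, "TCP"): ["web", "sidecar"]}`, while 8080/UDP in a third container does not conflict with them.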

Check Item 8: Whether the Container Startup Command Is Correctly Configured

The error messages are as follows:

Solution

Log in to the CCE console. On the workload details page, choose Upgrade > Advanced Settings > Lifecycle and check whether the startup command is correctly configured.
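When reviewing the startup command, it helps to know how the pod spec's `command` and `args` fields combine with the image's ENTRYPOINT and CMD. This sketch encodes the Kubernetes rules so you can see what a container will actually execute. It assumes the startup command configured on the console maps to `command` and `args`, and `effective_argv` is a hypothetical helper.

```python
# Work out the argv a container starts with, following the Kubernetes rules for
# how `command` and `args` override the image's ENTRYPOINT and CMD.
from typing import List, Optional


def effective_argv(
    entrypoint: List[str],
    cmd: List[str],
    command: Optional[List[str]] = None,
    args: Optional[List[str]] = None,
) -> List[str]:
    """Return the argv the container starts with.

    entrypoint/cmd come from the image; command/args come from the pod spec
    (None means the field is not set).
    """
    if command is not None:
        # command replaces ENTRYPOINT, and the image CMD is ignored.
        return command + (args or [])
    if args is not None:
        # args alone replace CMD but keep the image ENTRYPOINT.
        return entrypoint + args
    return entrypoint + cmd  # neither set: the image defaults are used
```

A common mistake this exposes is setting only `args` while expecting them to replace the whole command: the image ENTRYPOINT still runs in front of them.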